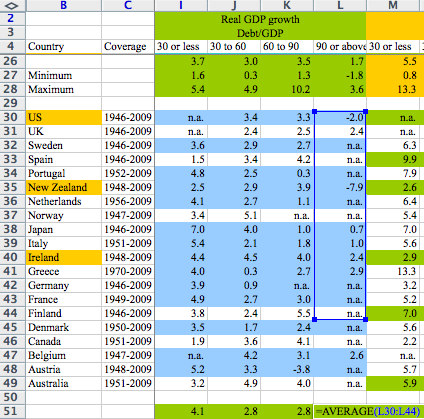

Last week, I commented on a prominent data analysis blunder in Excel. Even if this particular problem seems to result from the spreadsheet's design, anybody doing data analysis has experienced coding errors, no matter the tool. That doesn't mean that nothing can be done to reduce their occurrence. Phil Price shared some tips on avoiding data problems, mostly for R users. Here are some other tips with more emphasis on Excel:

- Make checklists. This might sound unimpressive but checklists have been used in aviation for decades and recent research suggests they have a great potential to improve safety in surgical procedures. If I am going to use some data pipeline repeatedly (perhaps because I am doing a pilot study or interim analysis or because I am going to analyze samples from several countries), I write down a list of the main steps in the analysis and then go through them one-by-one, checking them off as I go.

- Document everything. This is a general principle in software engineering and also the idea behind the tedious “method” sections in scientific publications but keeping track of what you did is very helpful when you want to do something similar or to diagnose an error some time after the initial analysis. Just like checklists, documentation doesn't need to be extraordinarily complicated. In Excel, I usually add a special worksheet named “Notes” containing a few sentences on the origin of the data and, when relevant, a code book for the main variables. It's not much trouble when creating the file, but it can literally save you hours of work if you ever need to come back to an old project after several months.

- Stick to the pipeline (using a checklist!). If I pulled some data from a database, merged it with some other data entered in Excel, exported everything to a statistical package and only then noticed a mistake, I won't correct it manually. Instead, I will create a corrected version of the original data file and update all intermediate steps. The idea is that having incoherent versions of a data set lying around make it more likely to copy some result from the wrong version or loose track of what I did.

- Use “version control”. For R or perl scripts, I use an actual version control system (subversion or git). I don't bother doing that for Excel workbooks (mostly because the “diff” function is not nearly as useful as with plain text files) but when I try a new analysis or add some data to an older one, I don't erase the original workbook, I create a new one (often with a name like XXXX-20130301). This helps keeping track of what I did or diagnosing problems down the line. If I extracted some result to put it in a report, I am able to find out where it came from even if I added data later on. Comparing versions also sometimes helps noticing or understanding problems (e.g. if some summary statistics do not change at all between versions, there might be some issues in the formulas).

- In Excel, keep the data in a separate worksheet and avoid touching them after the initial import. In particular, I avoid sorting records or recoding variables by search and replace. Instead, I create new columns or secondary worksheets. This makes formulas longer but ensures that I won't dissociate rows or accidently overwrite data. This also makes it easier to compare different Excel workbooks and the original data source if needed.

- When reusing a worksheet, start by “invalidating” it. What I mean by this is marking everything that needs to be updated. For example, when I want to analyze some new data following a procedure I used before, I first copy the workbook, color everything in red and then start updating cells, turning them back to their original color as I go. This technique can actually save you some time when you are in a hurry because you can then simply copy a workbook and focus on what you need right now. Since you are not working on the original file and the other worksheets are clearly marked as “invalid”, you don't need to worry about checking everything right away. Even if you can't update everything, you won't leave your workbook in an incoherent state and you will know where the new and where the old results are.

- Double-check everything. If there are several routes to get a result, code several of them and compare. For example, the sum of row totals, column totals and a grand total computed directly from the original table should all be identical, etc. This might sound like a waste of time but when you find embarrassing mistakes after working on a data set for days, you realize how easily stupid mistake can slip through. For results that will end up in a publication, I strive to run the main analysis from the ground up in two different statistical packages (e.g. R and SPSS) when possible. The goal is not so much to check for bugs in the packages themselves but to expose coding or scripting errors on my part. Obviously, this can be very time-intensive, not so much because of the analyses themselves (if you decided what you want to do and know how to do it, it can be very fast) but because you will invariably run into puzzling differences that take a long time to understand (rounding errors, hidden defaults, slightly different formulas for what you thought were identical tests, etc.) You do learn a lot about your tools in the process, though.

- Use tables (also known as lists). I don't use them very often, but I though I should mention tables anyway because they directly address the issue in the Reinhart-Rogoff Excel worksheet. In a table, variables can be manipulated as such, just like in a regular statistical package, providing something close to the “data frame” construct in R.